Google demonstrates gesture control using radar

At Google I/O on May 20, 2016, two prototype products built with semiconductor devices from Infineon and controlled exclusively through gestures were demonstrated. The two products, a smartwatch and a wireless speaker, recognize gestures in place of physical switches or buttons.

Infineon and Google ATAP aim to address numerous markets with “Soli” radar technology, among them home entertainment, mobile devices and the Internet of Things (IoT). The control mechanism is built on radar chips from Infineon combined with Google ATAP’s software and interaction concepts. Both companies are preparing to commercialize “Soli” technology jointly.

“Sophisticated haptic algorithms combined with highly integrated and miniaturized radar chips can foster a huge variety of applications,” said Urschitz, division President at Infineon.

“Gesture sensing offers a new opportunity to revolutionize the human-machine interface by enabling mobile and fixed devices with a third dimension of interaction,” said Ivan Poupyrev, Technical Project Lead at Google ATAP. “This will fill the existing gap with a convenient alternative to touch- and voice-controlled interaction.”

The developers’ ambitions extend well beyond the audio and smartwatch markets: “It is our target to create a new market standard with compelling performance and new user experience, creating a core technology for enablement of augmented reality and IoT,” said Urschitz.

Virtual reality technologies have been able to visualize new realities for some time, but so far users could not interact with them. The 60 GHz radar developed by Google and Infineon is intended to bridge this gap as a key enabling technology for augmented reality.

For the first time, two end-user products have been presented that can be operated precisely by hand movements alone. Google and Infineon plan to begin jointly marketing the underlying technology in mid-2017.

Gesture control represents the key technology that will be needed to achieve a real breakthrough in augmented reality.

There are many approaches to using augmented reality to fuse physical and simulated reality. What has been missing until now is a fast, intuitive way to transmit commands to the computer. To date, much of the focus has been on touchscreens, which require the user to stay in constant close contact with the device. Speech recognition, on the other hand, allows greater flexibility, but it is largely limited to individual users.

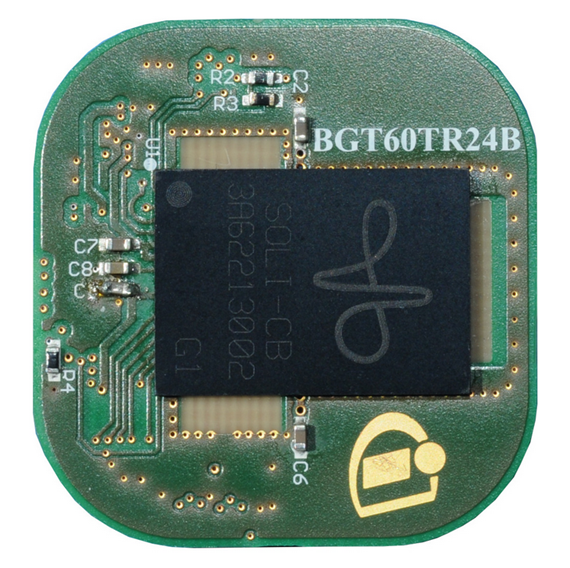

Controlling devices with hand gestures closes this gap. Gesture control opens up the third dimension, breaking free of the two-dimensional user interface. Google and Infineon developed this technology over the last few years under the internal name “Soli”. A 9 × 12.5 mm radar chip from Infineon sends out waves and receives their reflections from the user’s fingers. Fine hand movements, like winding a watch, can be detected at a distance of up to 15 meters. Just a few decades ago, it took a parabolic antenna 50 m in diameter to do what the chip’s technology can do today.
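The article does not describe how Soli processes its radar signals, but the basic physics behind a chip that “sends and receives waves that reflect off the user’s finger” can be illustrated. The sketch below uses only textbook relations and assumes nothing beyond the 60 GHz carrier mentioned earlier; the function names are hypothetical and this is not Google’s or Infineon’s implementation. It computes the free-space wavelength at 60 GHz (about 5 mm, one reason millimeter-wave antennas can be so small) and the round-trip delay of a reflection, from which distance follows as d = c·t/2.

```python
# Illustrative radar relations for a 60 GHz carrier.
# Generic physics only; hypothetical helper names, not Soli's actual signal processing.

SPEED_OF_LIGHT = 299_792_458.0  # metres per second (exact SI value)


def wavelength_m(frequency_hz: float) -> float:
    """Free-space wavelength: lambda = c / f."""
    return SPEED_OF_LIGHT / frequency_hz


def round_trip_delay_s(distance_m: float) -> float:
    """Time for a wave to reach a target and reflect back: t = 2d / c."""
    return 2.0 * distance_m / SPEED_OF_LIGHT


def distance_from_delay_m(delay_s: float) -> float:
    """Inverse of round_trip_delay_s: d = c * t / 2."""
    return SPEED_OF_LIGHT * delay_s / 2.0


if __name__ == "__main__":
    lam = wavelength_m(60e9)
    print(f"Wavelength at 60 GHz: {lam * 1000:.2f} mm")       # about 5 mm
    t = round_trip_delay_s(15.0)
    print(f"Round-trip delay at 15 m: {t * 1e9:.1f} ns")     # about 100 ns
    print(f"Recovered distance: {distance_from_delay_m(t):.1f} m")
```

The round-trip delays involved are on the order of nanoseconds, which is why practical radar chips typically measure distance indirectly (for example via frequency or phase differences) rather than by timing individual echoes directly.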

The chip is made by Infineon in Regensburg, Germany. It runs algorithms developed by Google ATAP (short for Advanced Technology and Projects group). This combination is used in the smartwatch from LG and the wireless speaker from JBL. Beginning in mid-2017, both companies plan to co-market the hardware and software as a single solution.