The “wallet” in the modern sense of “flat case for holding paper currency” dates back almost 200 years. The word itself goes back 700 years, and the concept (minus paper currency) for millennia.

Leather wallets were not “smart,” of course; they were atom agnostic and even payment-type agnostic, as credit cards and the like started proliferating in the mid-20th century. But today the payment type is almost a pointer, in computer science vernacular, to a source of money. And the wallet itself is the master pointer, used for opening and closing a transaction and for choosing which sub-pointer to assign.

Because intercepting the payment leads to a whole downstream treasure of goodies, the wallet — once tanned animal hide — is going to be the ultimate financial platform. As digital wallets increasingly become the origination point for consumer spending, they will become THE platform for downstream financial services — creating an opportunity for startups and a problem for established players.

The problem, of course, is that a payment type can become a wallet, and a wallet can become a payment type. So which is which? If a ridesharing company has 100 million credentials, it has solved half of the network effect problem of being a payment company, so you could imagine using that app as your wallet at, say, Walmart. Or take Starbucks, one of the biggest wallets: it has a pointer within its wallet to Visa Checkout, another wallet, which points to a card type (a Visa card, or even a MasterCard/Amex/Discover card), which points to a “loan” (the “credit” part of a credit card), which ultimately points to a bank account.
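To make the pointer metaphor concrete, here is a minimal sketch of the Starbucks chain as a singly linked structure. The `Pointer` class and `resolve` function are hypothetical names invented for illustration, not any real payments API, and the chain is simplified to one target per hop.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Pointer:
    """One hop in the chain: a wallet, card, credit line, or bank account."""
    name: str
    kind: str
    target: Optional["Pointer"] = None  # None marks the final source of money


def resolve(start: Pointer) -> list[str]:
    """Follow the chain downward and return the name of every hop visited."""
    path = []
    node: Optional[Pointer] = start
    while node is not None:
        path.append(node.name)
        node = node.target
    return path


# Illustrative chain from the Starbucks example; the names are labels only.
bank = Pointer("bank account", "bank account")
loan = Pointer("credit line", "loan", bank)
card = Pointer("Visa card", "card", loan)
visa_checkout = Pointer("Visa Checkout", "wallet", card)
starbucks = Pointer("Starbucks", "wallet", visa_checkout)

print(" -> ".join(resolve(starbucks)))
```

Note that two of the five hops have kind `"wallet"`: nothing in the structure distinguishes a wallet from a payment type except where it sits in the chain, which is the ambiguity described above.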

As a stack, we have hardware — your mobile phone — at the top and bank accounts holding the actual treasure at the very bottom. But it’s better to think of this “stack” as really a system of pointers, in this case downwards. And the goal for businesses is finding and occupying a defensible position in this stack that allows them to intercept payments, capturing and controlling value to become that ultimate financial platform.

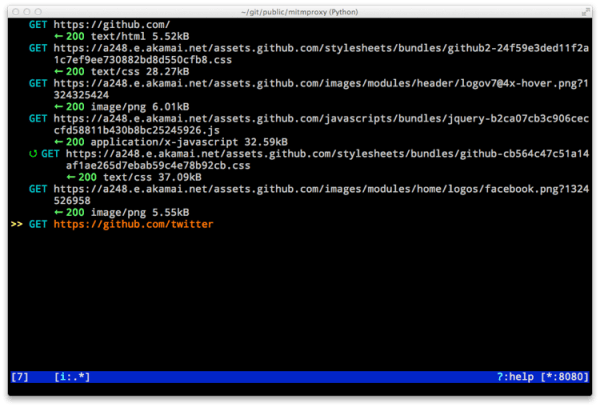

On my Apple iPhone, I can run the Uber app and pay via PayPal, which deducts the money from my American Express Card. For the first four components of the stack — hardware, operating system, app, and cloud backbone — the heuristics of success for capturing value are the number of integrations (i.e., the number of places people can use the wallet) and number of credentials (users who have committed more than one tender type).

Given the massive number of credentials they have and the controlling position as the “start” of the stack — unlike other players, Apple has both hardware and OS — Apple’s wallet as platform could deal a crippling blow to everyone down the stack.

This is especially true because the flow in this stack goes only one way: players below don’t get access to the resources up the stack.

In a mobile-only world, a well-coordinated effort to let you simply touch your thumb to your iPhone to pay on any ecommerce site or app could wipe out probably 20% of PayPal’s revenue, overnight [two-thirds of PayPal revenue is merchant services off eBay; assume 25% iOS share].
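One way to read the bracketed note is as a simple product of the two stated inputs. The sketch below is a back-of-envelope reading, not a computation the article spells out, and it assumes every iOS checkout in that segment would move to the native payment flow.

```python
# Inputs taken from the bracketed note above; both are the article's assumptions.
off_ebay_merchant_share = 2 / 3   # share of PayPal revenue from merchant services off eBay
ios_share = 0.25                  # assumed iOS share of that traffic

# Revenue exposed if every iOS checkout moved to a native thumbprint payment.
revenue_at_risk = off_ebay_merchant_share * ios_share

print(f"{revenue_at_risk:.1%}")
```

The product comes to roughly one-sixth of revenue, which sits a little under the “probably 20%” figure quoted above.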

The real value of occupying a defensible place in this stack is not even in processing the payment, however. Capital One spends a lot of money every year convincing you to apply for and use its credit card. Once subsumed under a digital wallet, though, that “usage” component gets further and further out of Capital One’s control, with tremendous implications for downstream interest (lending) revenue.

One change in Apple’s product design — for example, something as simple as alphabetization, which a leather wallet doesn’t do! — could move Bank of America ahead of Capital One as a “default,” moving more purchases in that direction.
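As a rough illustration of how little it takes to change a default, the sketch below picks the first card under two different orderings. The `default_card` helper and the “order added” rule are hypothetical; nothing here describes how any real wallet actually orders cards.

```python
def default_card(cards, key=None):
    """Return the card a wallet would list first under a given ordering."""
    return sorted(cards, key=key)[0]


# Hypothetical wallet contents, listed in the order the user added them.
cards_in_order_added = ["Capital One", "Bank of America"]

# Ordering by when each card was added keeps the first-added card on top.
by_added = default_card(cards_in_order_added, key=cards_in_order_added.index)

# Plain alphabetical ordering puts a different issuer on top.
by_alpha = default_card(cards_in_order_added)

print(by_added, "|", by_alpha)
```

The cards and the user are identical in both cases; only the sort key changed, and with it the issuer that sees the purchase.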

The “end result” of this whole system of pointers is usually an increasing balance on a revolving credit facility — a credit card. Take LendingClub and Prosper, the two biggest marketplace lending companies.

About half of LendingClub’s loan originations come from refinancing credit card debt, which it sources via U.S. Postal Service mail ads, Google ads, etc. But controlling a position in the purchase stack could, and arguably should, replace its normal customer acquisition process: rather than waiting for a consumer to accrue a large balance from a series of purchases (at a ridiculously high credit card interest rate) and then refinancing it, LendingClub could catch the balance as it comes in from purchases.

The next large consumer finance company is likely to interrupt this chain of pointers. But at which point in the stack? It will be very challenging to attack the top of the stack, as that would require a hardware-plus-OS wallet with massive adoption and a massive number of payment credentials. And attacking the bottom of the stack is challenging as well, and relatively unprofitable at that.

Right now, LendingClub will take your 18% APR Chase/Citi/et al. interest rate and refinance it down to 10%. But in a world where Apple Pay controls the front and existing banks like Wells Fargo provide the source of funds at the end, there’s no reason not to “automate away” the credit selection process. Why wouldn’t they just skip right to the rate LendingClub would have given you, or even skip to the best “marketplace lending” rate?
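To put the two rates side by side, here is a small sketch using simple one-year interest. The $5,000 balance is a hypothetical figure chosen for illustration, and the calculation ignores compounding, fees, and repayments.

```python
def annual_interest(balance: float, apr: float) -> float:
    """Simple one-year interest on a constant balance (no compounding, no repayments)."""
    return balance * apr


balance = 5_000.00        # hypothetical revolving balance, not from the article
card_apr = 0.18           # credit card rate cited in the article
refinanced_apr = 0.10     # refinanced rate cited in the article

card_cost = annual_interest(balance, card_apr)
refinanced_cost = annual_interest(balance, refinanced_apr)

print(f"card: ${card_cost:.2f}  refinanced: ${refinanced_cost:.2f}  "
      f"saved: ${card_cost - refinanced_cost:.2f}")
```

On that hypothetical balance the gap is about $400 a year, which is the spread a wallet could hand to the consumer at the moment of purchase instead of after the balance has built up.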

The biggest dislocation once that happens will be that your “credit card” will no longer be the default source of medium/long duration credit. This has major implications for all of consumer finance.

The future: Wallet apps, rewards, insights

For credit card companies, the smartest thing they can do is to not build their own cloud wallet, which creates an unnecessary “sub-pointer”. Yet many of them are doing this because they’re missing the full view of where value lies in the stack and how to better leverage their position within it.

For cloud wallets, which face the existential challenge of being caught, literally, in the middle, the smartest thing they can do is align themselves with a winning application wallet if they’re failing to acquire enough credentials on their own. Because payment companies (such as Chase and Citi) risk being abstracted into irrelevance, attaching themselves to the winning application wallets (the likes of Amazon, Lyft, Starbucks, and Uber) is one of the only ways to prioritize their existing “pointer” vis-a-vis others.

So what does this all mean for startups? Well, it will become easier to address the most profitable part of the stack — lending — without getting into the herculean and quixotic path of payments. Companies like PayByTouch over 8 years ago and Powa more recently together evaporated over $500M before dissolving into bankruptcy. Today, there are many other parts of key infrastructure that can be rewritten with access to digital wallet-as-platform: rewards, PFM (personal financial manager, à la Quicken/Mint), merchant recommendations, offers, etc.

There is also a whole generation — millennials — who don’t understand the notion of balancing a checkbook because they don’t even have a checkbook.

Balancing a checkbook is an anachronism, as are the PFMs that grew up around that notion; even “modern” PFMs show purchases only after long delays.

This is because the purchase goes from merchant, to merchant bank, to network, to issuing bank, to aggregator, to PFM. But in digital wallets, purchases show up instantly — allowing recommendations, offers, and discounts to be instantaneous. Digital wallets may finally enable the long-sought “taste graph” (the mother of all “people who bought this, also bought that”) to be built.

All of this will require the “top” of the stack to open up: Apple, Google, and other players must recognize they are building a financial platform, which, like all platforms, is most valuable when developers have access. Given the size of the market, it’s a question of when, not if, this will happen. And once it does, it’s likely to be a game changer for the banks that for decades have relied on branches and consumer branding, and for startups that will finally find themselves with a capital-efficient entry point for disrupting consumer finance.